Saturday, April 11, 2009

Friday, April 10, 2009

Finding Pages from Browser History

A new tool aims to make a Web browser's history more useful.

By Erica Naone

Credit: Technology Review

Web browsers remember the sites that they have visited in the past, but few people seem to use this information. Jing Jin, a graduate student at Carnegie Mellon University, and her colleagues have developed a new browser-history tool after studying how people use their browser history. They demonstrated the prototype in a presentation this week at the Computer-Human Interaction (CHI 2009) Conference, in Boston.

"Despite the fact that bookmarks and Web histories are built into every browser, we found that people tend to either use search engines or reconstruct the path by which they got to a page in the first place," says Jason Hong, an assistant professor at Carnegie Mellon, who was involved in the research. "Most people either found Web history too hard to use or didn't even know that it existed."

The researchers tested users' ability to recall Web pages and found that URLs and textual descriptions (by which most browsers organize their history) weren't as easy to remember as colors or images collected from the Web pages themselves. So the researchers' tool, currently a plug-in for the Firefox browser, lets users browse images of websites that they have visited in the past, or type in search queries that find previously visited pages.

The researchers also used the new history tool to improve Web search, by adding thumbnails from browser history at the top of Google search results. The thumbnails were selected according to the search terms that the user entered into the search engine.

In testing, the researchers discovered that people could find the page they were looking for within about a minute on average using the prototype add-on, compared with an average of three minutes using the standard browser history. The user tests also showed that people were able to actually find a given old page more often with the prototype.

Eytan Adar, a PhD student at the University of Washington, is also researching ways to redesign the browser's history. He says that the current design doesn't match the way that people revisit pages. In collaboration with scientists from Microsoft Research, Adar found that people tend to visit Web pages in certain patterns. He says that it might be possible to split the history so that it treats these pages differently according to the user's patterns. For example, those pages that people revisit over and over in a short period could appear in a temporary "working queue," Adar says. The pages that they access regularly could be displayed in a more persistent list, while the pages that they revisit rarely might be accessed through a search function.
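Adar's proposed split can be illustrated as a simple rule-based classifier over history entries. The sketch below is hypothetical: the function name `classify_history`, the bucket names and every threshold (three visits within two hours for the working queue, visits on five distinct days for the persistent list) are assumptions made for illustration, not details from the research.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical thresholds chosen for illustration only; the research
# described in the article does not publish any values.
BURST_WINDOW = timedelta(hours=2)   # "over and over in a short period"
BURST_MIN_VISITS = 3                # visits inside the window that count as a burst
REGULAR_MIN_DAYS = 5                # distinct days of visits that count as "regular"


def classify_history(visits, now):
    """Split history into the three groups Adar describes.

    visits: iterable of (url, datetime) pairs from the browser history.
    now:    the current time, used to decide what counts as "recent".
    Returns a dict mapping each URL to "working_queue",
    "persistent_list" or "search_only".
    """
    by_url = defaultdict(list)
    for url, ts in visits:
        by_url[url].append(ts)

    buckets = {}
    for url, stamps in by_url.items():
        # Many visits within the burst window suggest a page in active use.
        recent = sum(1 for t in stamps if timedelta(0) <= now - t <= BURST_WINDOW)
        # Visits spread across many different days suggest a habitual page.
        distinct_days = len({t.date() for t in stamps})
        if recent >= BURST_MIN_VISITS:
            buckets[url] = "working_queue"
        elif distinct_days >= REGULAR_MIN_DAYS:
            buckets[url] = "persistent_list"
        else:
            buckets[url] = "search_only"
    return buckets


if __name__ == "__main__":
    now = datetime(2009, 4, 10, 12, 0)
    visits = (
        [("https://example.com/draft", now - timedelta(minutes=m)) for m in (10, 30, 60)]
        + [("https://example.com/news", now - timedelta(days=d)) for d in range(1, 8)]
        + [("https://example.com/recipe", now - timedelta(days=30))]
    )
    for url, bucket in sorted(classify_history(visits, now).items()):
        print(bucket, url)
```

Checking the burst rule first means a habitual page that is also being consulted intensively right now lands in the working queue; a real design might instead let a page appear in more than one list.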

However, some experts argue that the browser history is less broken than these researchers suggest. Larry Constantine, a usability expert and professor at the University of Madeira, in Portugal, notes that some browsers already make sophisticated and hidden use of history information. For example, the Firefox 3 browser is good at guessing URLs from a user's history based on keywords entered in the address bar. Whether the browser history is useful, he says, "depends a lot on which version of the browser people are using and on their personal habits."

Jared Spool, founding principal of User Interface Engineering, a consulting firm based in North Andover, MA, says that people tend to revisit pages by retracing the way that they found them in the first place. "It's sort of a cliché, but we are creatures of habit," he says.

If the first path to the information worked well and quickly, it's not natural to seek out a second path, Spool says. He's not sure that most users would change their behavior even if the history were better designed, but he sees potential for using it for specific purposes. For example, he says, authors might want to use an improved browser-history tool to revisit research resources, or a company's employees might use such a tool to help them navigate a poorly organized intranet, which might contain material that's harder to search than is content on the wider Internet.

Spool says that the ideas behind the prototype history tool are likely to filter into consumer products in a very different form. "What we're seeing here is the first piece of the pollination process," he says.

Indeed, the Carnegie Mellon researchers point out that browsers are already starting to explore alternate ways to use data from people's browsing habits. For example, Google's Chrome browser features a "speed-dial" page when a user opens a new tab that shows thumbnails of frequently visited websites. Carnegie Mellon's Hong notes that a redesign of the browser's history could be particularly helpful for less Web-savvy users, who might have trouble figuring out the steps of the path that they originally took to a piece of information.

Thursday, April 09, 2009

Why IT Solutions Are Never Simple

10:37 AM Wednesday April 1, 2009

Tags: IT management, Information & technology, Technology

Without concerted effort, what was once neat and tidy becomes marred and messy. Just finding something in the garage feels like an archaeological expedition. Periodically, when someone dies, or relocates, or becomes disgusted, there's a whirlwind of activity to purge and reorganize. This cathartic experience is followed by a brief period of exhilaration, until time passes and entropy exerts itself once again.

So of course the airlines didn't intend to build "multiple old computer systems that don't share information well." When these systems were initially constructed (in the 1960s and 1970s), they were neat and tidy. Application requirements were defined from the point of view of a department and the needs of the people within it. The approach to programming reflected a simple and static world where it was the norm to embed data and business rules together with the logic necessary to support a business function, for example, to book and manage reservations. No one conceived that customers would book their own travel, that airlines would merge and spin off, that competing airlines would sell seats through code-share agreements, or that competition would become so fierce as to necessitate greeting customers by name and remembering their favorite drink.

To respond to these demands in a timely manner, IT did what we all do. They packed as much as they could into the existing "application" garages. When it became impossible to enter them without breaking something, they built new ones to store additional, but redundant, data, business rules, and logic. In an attempt to coordinate these applications to support business processes, they built a myriad of point-to-point interfaces between the applications. As a result of these seemingly efficient but short-sighted approaches, the systems architecture of the average company that has been around for 20 years or more resembles the aptly named "scare" diagram.

Because of this complexity, many companies don't have a definitive understanding of their customers, products, and performance and have difficulty modifying business processes in response to new opportunities and competitive realities. Furthermore, they devote the lion's share of their IT spend to maintaining existing systems rather than innovating new capabilities.

None of this is news, of course. During the 1990s, we started to realize that IT systems often inhibited rather than enabled change. Since then, IT and business leaders have been working hard to increase agility by replacing systems and using new approaches to promote integration and commonality. Along the way, we have learned that:

- Across-the-board "scrape and rebuild" of systems usually doesn't make sense because often the gain isn't worth the pain. This approach is like knocking down your garage and throwing out everything in it. There's a lot of good stuff in your existing applications and there is no guarantee that the new systems will be that much better, less complex, or cheaper than the old ones.

- Hiding existing systems complexity using a "layer and leave" approach makes it easier to use and integrate existing systems, but doesn't reduce the costs of supporting inflexible and redundant systems. This approach is like hiring a garage "concierge" to find things and put them away. Unfortunately, you have to pay for the concierge service as well as the costs of maintaining the garages.

- The best way to manage complexity is to "clean as you go". This is a combination of the two approaches — implemented on a project-by-project basis. Each project is defined in a way that moves the enterprise closer to the desired "to be" architecture. Using our garage analogy, to move something in, one or two things must be reorganized or moved out. This approach includes layering, but also extracting critical data and functionality out from applications and rebuilding them so that they can be managed as an enterprise asset.

IT isn't alone in the need to simplify. As Rosabeth Moss Kanter pointed out, "Companies sow the seeds of their own decline in adding too many things — product variations, business units, independent subsidiaries — without integrating them." Keep in mind that, since IT architectures mirror the inherent complexity of the businesses that they support, it's impossible to have a truly agile and cost-effective technical architecture without a simplified business architecture.

It's hard to say "no" to the extra product line, merger, reporting package or, for that matter, bicycle. Simplicity's just not that simple. How are you doing getting there?

Yuan trade settlement to start in five Chinese cities

Updated: 2009-04-09 07:37

Five major trading cities have got the nod from the central government to use the yuan in overseas trade settlement, a move seen as one more step in China's recent efforts to expand the use of its currency globally.

The move is aimed at reducing the risk from exchange rate fluctuations and giving impetus to declining overseas trade, according to a statement posted on the government website.

"The trial is the latest move toward making the yuan an international currency," Huang Weiping, professor of economics at Renmin University of China, said. "The prospect of a weaker US dollar is making the transition more imperative for China."

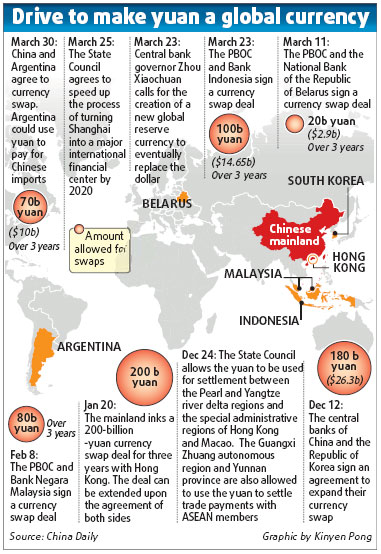

The mainland is trying to promote the use of the yuan among trade partners and, in the past four months, has signed 650 billion yuan (US$95 billion) worth of swap agreements with Argentina, Indonesia, South Korea, Malaysia, Belarus and the Hong Kong Special Administrative Region. The agreements allow them to use their yuan reserves to trade directly with the Chinese mainland, up to a set limit in volume.

Stephen Green, head of China Research of Standard Chartered Bank, said the swap deals would help encourage the use of the yuan as the currency of choice for international trade.

China now uses the US dollar to settle most of its international trade but the drastic swings in the greenback have become a risk for Chinese exporters in recent years.

Dong Xian'an, an economist with China Southwest Securities, said: "For many exporters, the dollar's fluctuation is a serious concern. The ability to settle trade in the yuan would reduce such risk."

Chen Xianbin, chairman of Guangxi Sanhuan Enterprise Group, told China Daily that his company lost more than 150 million yuan in the past three years from international trade due to the exchange rate changes between the yuan and the greenback. Chen's company, a ceramic tableware exporter, relies on Southeast Asian markets for 15 percent of total sales.

China's foreign trade has been on a continuous decline amid the current global financial crisis. Exports plunged 25.7 percent year on year in February, one of the sharpest falls ever, while imports dived 24.1 percent.

Analysts said the US Federal Reserve's decision to buy long-term Treasuries, which means printing new money, may also lead to a depreciation of the US dollar. That is another reason for China to reduce the use of the dollar in trade, so that the value of its US$1.95 trillion in foreign exchange reserves does not fall.

Zhou Xiaochuan, the central bank governor, said last month that in the long run, it may be ideal to replace the dollar with a new international reserve currency under the mechanism of the International Monetary Fund.

Wednesday, April 08, 2009

The G-20’s Uncertain Roadmap

Spot the difference: the US president has moved to the second row. A symbolic change showing a new world?

LONDON: The prime achievement of this month’s G20 summit, in long-term, historical terms, may turn out to be that it took place at all and ended with sufficient harmony for a second meeting to be planned. That is not to damn the proceedings in London with faint praise. By bringing China, India, Brazil and Saudi Arabia together with the leaders of the developed world to discuss the economic crisis, the summit provided a vivid manifestation of how globalised the world has become. The G7 and G8 may continue to meet, but a wider forum has been created which reflects the way in which the world has altered.

That said, the world is in need not so much of long-term historical significance as of more immediate measures to cope with a recession which grows more threatening by the day. On this count, the London summit fell decidedly short. Though the line-up for the final photograph (with President Barack Obama moving to the back row) may have illustrated the widening of the decision-making process, the proceedings showed all too clearly how national and regional divisions stand in the way of effective global action.

Despite some pre-summit grandstanding by President Nicolas Sarkozy of France, who threatened to leave if he did not get his way, there was never a serious threat of a breakdown; the meeting was too important for that. Nonetheless, a more serious and fundamental flaw was evident, one which will have to be overcome if real progress is to be made at future G20 meetings. The summit showed little readiness on anybody’s part to budge from prior positions, to compromise or to get to grips with basic issues. If that does not change, the G20 will be able to play only a marginal role as individual member countries, and blocs such as the European Union, pursue their own paths.

The fundamentally destabilizing structural divide between the surplus exporting nations and the deficit importing countries was not addressed. While economic stringency may be obliging Americans to spend less, a shift away from the Clinton- and Bush-era economic model is still a distant prospect, as the US stimulus program aims to boost incomes and, thereby, consumption. For their part, Germany, China and Japan see their road to recovery as lying through the old model: a revival in exports. Chancellor Angela Merkel says her country does not want to alter its reliance on exports, while China has been rolling out tax relief for export industries under strain, from steel to textiles. Between them, the three biggest exporting nations run an annual surplus of $835 billion, which has to be matched by spending in the deficit nations.

The $1 trillion pledged to the IMF, the World Bank and other international bodies was welcome, but well short of what is needed, and it remains a general commitment with no fulfillment date attached. President Obama may have wowed public opinion on his visits to London, Strasbourg, and Prague, but Europe’s leaders (with the exception of the fence-sitting British) were not swayed when it came to joining the United States and China in stepping up stimulus measures. For the Europeans, who point to their welfare states as protecting their citizens from the downturn, tighter regulation and a drive against tax havens were more important than pumping in more money and aggravating budget deficits, despite arguments that getting out of the present hole is more important than constructing machinery to prevent similar outcomes in the future.

As Prime Minister Gordon Brown and others insisted, the summit was only an initial encounter. Much more work and many meetings will be needed to work out solutions to the crisis. The urgency of the fast-deteriorating situation, though, leaves no time for such an exercise.

Away from the cheering European crowds, President Obama faces massive challenges as US unemployment hits a 25-year high and Congress estimates this year’s federal deficit at $1.8 trillion. The OECD predicts a 4.3 per cent contraction in advanced economies this year, followed by stagnation in 2010. Growth in the Eurozone is forecast to drop by 2 per cent this year. Eastern Europe is in the greatest danger. Exports from China, India and South Korea fell by more than 20 per cent in February from a year earlier. Nobody knows how many toxic assets remain hidden, but Western banks are in no condition (or state of mind) to fuel expansion: a study by Morgan Stanley puts the balance-sheet shrinkage at 15 leading banks at $3.6 trillion, with another $2 trillion to come.

There was, of course, a commitment to free trade in London; anything else would have been unthinkable. But, with its report that 17 of the 20 countries represented had enacted protectionist measures, the World Bank showed just how fallible such promises can be. From the ‘Buy American’ program to President Sarkozy’s talk of repatriating car manufacturing by French companies from Eastern Europe, politicians all too easily pander to real or imagined political pressures. Mr. Brown’s spin doctors may have come up with a reassuring gloss on his earlier promise to ‘create British jobs for British workers’, but the visceral appeal to nationalism was unmistakable.

What makes the current crisis all the more challenging is that it is taking place amid a shift in the balance of the world economy. If big emerging economies, notably China and India, have been hard hit by their exposure to the contraction of global demand, the London summit was fresh confirmation of globalisation’s momentum since China joined the World Trade Organization in 2001. The problem is that nobody has a clear vision of how the two parts of the global economy (the West plus Japan on the one hand, and the BRIC nations of Brazil, Russia, India and China on the other) can better fit together in more than a simple trading relationship.

This quandary, addressed only by implication in London, concerns China most of all. The last seven years not only propelled the People’s Republic into the position of the third largest global economy but also turned it from a country which, following the advice of the patriarch Deng Xiaoping, grew richer but kept quiet into a power that is ready to make its voice heard. So, before the summit, Beijing’s leadership lambasted the Western financial system, talked about Special Drawing Rights (SDRs) held by the IMF as an alternative to the dollar and admonished Washington to set its house in better order.

However, for all its expressions of concern about its dollar holdings, there may be little China can do to lessen its symbiotic relationship with the US, at least in the short term. Still, it has gained a voice which will not be muffled, and its example is likely to be followed by other big emerging economies represented at the summit. A page has been turned which recognizes the scale and scope of globalisation. Now, the urgent task is to put more substance into the new world forum before the strength of the recession and the play of national interests block the emergence of a new mechanism that can respond to the global challenge.

Jonathan Fenby’s latest book, the Penguin History of Modern China: The Fall and Rise of a Great Power, 1850-2009, has just appeared in paperback.

Rights:

© 2009 Yale Center for the Study of Globalization

Talking rubbish

A special report on waste

Feb 26th 2009

From The Economist print edition

Environmental worries have transformed the waste industry, says Edward McBride. But governments’ policies remain largely incoherent.

THE stretch of the Pacific between Hawaii and California is virtually empty. There are no islands, no shipping lanes, no human presence for thousands of miles—just sea, sky and rubbish. The prevailing currents cause flotsam from around the world to accumulate in a vast becalmed patch of ocean. In places, there are a million pieces of plastic per square kilometre. That can mean as much as 112 times more plastic than plankton, the first link in the marine food chain. All this adds up to perhaps 100m tonnes of floating garbage, and more is arriving every day.

Wherever people have been—and some places where they have not—they have left waste behind. Litter lines the world’s roads; dumps dot the landscape; slurry and sewage slosh into rivers and streams. Up above, thousands of fragments of defunct spacecraft careen through space, and occasionally more debris is produced by collisions such as the one that destroyed an American satellite in mid-February. Ken Noguchi, a Japanese mountaineer, estimates that he has collected nine tonnes of rubbish from the slopes of Mount Everest during five clean-up expeditions. There is still plenty left.

The average Westerner produces over 500kg of municipal waste a year—and that is only the most obvious portion of the rich world’s discards. In Britain, for example, municipal waste from households and businesses makes up just 24% of the total (see chart 1). In addition, both developed and developing countries generate vast quantities of construction and demolition debris, industrial effluent, mine tailings, sewage residue and agricultural waste. Extracting enough gold to make a typical wedding ring, for example, can generate three tonnes of mining waste.

Out of sight, out of mind

Rubbish may be universal, but it is little studied and poorly understood. Nobody knows how much of it the world generates or what it does with it. In many rich countries, and most poor ones, only the patchiest of records are kept. That may be understandable: by definition, waste is something its owner no longer wants or takes much interest in.

Ignorance spawns scares, such as the fuss surrounding New York’s infamous garbage barge, which in 1987 sailed the Atlantic for six months in search of a place to dump its load, giving many Americans the false impression that their country’s landfills had run out of space. It also makes it hard to draw up sensible policies: just think of the endless debate about whether recycling is the only way to save the planet—or an expensive waste of time.

Rubbish can cause all sorts of problems. It often stinks, attracts vermin and creates eyesores. More seriously, it can release harmful chemicals into the soil and water when dumped, or into the air when burned. It is the source of almost 4% of the world’s greenhouse gases, mostly in the form of methane from rotting food—and that does not include all the methane generated by animal slurry and other farm waste. And then there are some really nasty forms of industrial waste, such as spent nuclear fuel, for which no universally accepted disposal methods have thus far been developed.

Yet many also see waste as an opportunity. Getting rid of it all has become a huge global business. Rich countries spend some $120 billion a year disposing of their municipal waste alone and another $150 billion on industrial waste, according to CyclOpe, a French research institute. The amount of waste that countries produce tends to grow in tandem with their economies, and especially with the rate of urbanisation. So waste firms see a rich future in places such as China, India and Brazil, which at present spend only about $5 billion a year collecting and treating their municipal waste.

Waste also presents an opportunity in a grander sense: as a potential resource. Much of it is already burned to generate energy. Clever new technologies to turn it into fertiliser or chemicals or fuel are being developed all the time. Visionaries see a future in which things like household rubbish and pig slurry will provide the fuel for cars and homes, doing away with the need for dirty fossil fuels. Others imagine a world without waste, with rubbish being routinely recycled. As Bruce Parker, the head of the National Solid Wastes Management Association (NSWMA), an American industry group, puts it, “Why fish bodies out of the river when you can stop them jumping off the bridge?”

Until last summer such views were spreading quickly. Entrepreneurs were queuing up to scour rubbish for anything that could be recycled. There was even talk of mining old landfills to extract steel and aluminium cans. And waste that could not be recycled should at least be used to generate energy, the evangelists argued. A brave new wasteless world seemed nigh.

But since then plummeting prices for virgin paper, plastic and fuels, and hence also for the waste that substitutes for them, have put an end to such visions. Many of the recycling firms that had argued rubbish was on the way out now say that unless they are given financial help, they themselves will disappear.

Subsidies are a bad idea. Governments have a role to play in the business of waste management, but it is a regulatory and supervisory one. They should oblige people who create waste to clean up after themselves and ideally ensure that the price of any product reflects the cost of disposing of it safely. That would help to signal which items are hardest to get rid of, giving consumers an incentive to buy goods that create less waste in the first place.

That may sound simple enough, but governments seldom get the rules right. In poorer countries they often have no rules at all, or if they have them they fail to enforce them. In rich countries they are often inconsistent: too strict about some sorts of waste and worryingly lax about others. They are also prone to imposing arbitrary targets and taxes. California, for example, wants to recycle all its trash not because it necessarily makes environmental or economic sense but because the goal of “zero waste” sounds politically attractive. Britain, meanwhile, has started taxing landfills so heavily that local officials, desperate to find an alternative, are investing in all manner of unproven waste-processing technologies.

As for recycling, it is useless to urge people to salvage stuff for which there are no buyers. If firms are passing up easy opportunities to reduce greenhouse-gas emissions by re-using waste, then governments have set the price of emissions too low. They would do better to deal with that problem directly than to try to regulate away the repercussions. At the very least, governments should make sure there are markets for the materials they want collected.

This special report will argue that, by and large, waste is being better managed than it was. The industry that deals with it is becoming more efficient, the technologies are getting more effective and the pollution it causes is being controlled more tightly. In some places less waste is being created in the first place. But progress is slow because the politicians who are trying to influence what we discard and what we keep often make a mess of it.

Where governments tax cigarettes the most

Up in smoke

Apr 7th 2009

From Economist.com

ON APRIL 1st America's government announced a big increase in taxes on cigarettes. Federal taxes leapt from 39 cents to $1.01 a packet. American smokers also pay state taxes ranging from 17 cents in Missouri to a whopping $2.75 in New York. Anti-smoking campaigners argue that higher taxes encourage smokers to quit and deter others from taking up the habit. But high taxes do not always mean expensive cigarettes. In Estonia and Slovakia, where more than 90% of the price of cigarettes goes in taxes, a packet of 20 Marlboros costs $2.22 and $2.72 respectively. In some countries tobacco companies decide to cut profits to ensure that people keep puffing. It may work. In Estonia half the population smoke; in Slovakia 42% like a crafty puff.

A familiar-sounding industry spat breaks out over standards

Computing

Clash of the clouds

Apr 2nd 2009

From The Economist print edition

CLOUD computing may be the next big thing, but its politics are as old as the mainframe. Geeks from the early years of the information-technology (IT) industry would have had no difficulty deconstructing a quarrel that has broken out among IT’s modern giants. At issue is an “Open Cloud Manifesto”, which was published on March 30th.

Even before that date, accusations were flying around online. What caused the controversy was not so much the content, which is vague enough for almost everyone to agree with it. The “manifesto” essentially calls for computing firms not to fall back on bad old habits by trying to lock in customers as computing becomes a utility, generated somewhere on the network (“in the cloud”) and supplied as a service. Since there will be many different computing clouds, the manifesto points out, customers should be able to move their data and applications easily from one to another, and “open” standards, not controlled by one company, should be used whenever possible.

Although most companies would agree on the need for openness in theory, there is room for disagreement in practice about the timing. Cloud computing is in its early days. Many technical problems have yet to be solved, and the industry has still not even settled on a definition of cloud computing. Agreeing on principles for openness and perhaps even standards at this stage would benefit some firms and hurt others. It would create opportunities for latecomers and for new start-ups, and make life easier for firms that help companies stitch together their IT systems. But it would also rein in those firms that have already built cloud-computing businesses, often using proprietary technologies developed to solve particular problems. Arguably, nailing everything down too early may also hamper innovation.

It is hardly surprising that IBM is the main force behind the manifesto. The technology giant has long been a champion of open standards—not least, many say, because it makes most of its money from its huge services arm. Among the nearly 100 supporting firms are many start-ups, including Cast Iron, Engine Yard and RightScale. Others in favour include Cisco, EMC and SAP. These heavyweights support openness because widespread adoption of cloud computing would expand the market for their products.

Just as predictably, the leaders in cloud computing are absent from the list of supporters: Amazon, an online retailer that has successfully branched out into computing services; Google, which is not only a huge cloud unto itself but has built a cloud-computing platform for use by others; Salesforce.com, the biggest provider of software-as-a-service; and Microsoft. Indeed, it was an executive at the world’s biggest software firm, Steven Martin, who first leaked the manifesto, complaining that it had been drawn up in secret. “It appears to us that one company, or just a few companies, would prefer to control the evolution of cloud computing,” he wrote in a blog.

Things have now calmed down a bit. Supporters and opponents of the manifesto met at a conference in New York this week and agreed “on a shared goal to promote the use and awareness of open and interoperable cloud computing”, as one participant put it. But if history is any guide the controversy is sure to flare up again once cloud computing really takes off. There could even be an all-out standards war. But the row has at least ensured that the question of openness in the cloud has received a lot of attention, observes Bob Sutor, IBM’s point man for standards: “People have been put on notice that they can get locked into a cloud.”

Tuesday, April 07, 2009

How the Internet Got Its Rules

Bethesda, Md.

TODAY is an important date in the history of the Internet: the 40th anniversary of what is known as the Request for Comments. Outside the technical community, not many people know about the R.F.C.’s, but these humble documents shape the Internet’s inner workings and have played a significant role in its success.

When the R.F.C.’s were born, there wasn’t a World Wide Web. Even by the end of 1969, there was just a rudimentary network linking four computers at four research centers: the University of California, Los Angeles; the Stanford Research Institute; the University of California, Santa Barbara; and the University of Utah in Salt Lake City. The government financed the network and the hundred or fewer computer scientists who used it. It was such a small community that we all got to know one another.

A great deal of deliberation and planning had gone into the network’s underlying technology, but no one had given a lot of thought to what we would actually do with it. So, in August 1968, a handful of graduate students and staff members from the four sites began meeting intermittently, in person, to try to figure it out. (I was lucky enough to be one of the U.C.L.A. students included in these wide-ranging discussions.) It wasn’t until the next spring that we realized we should start writing down our thoughts. We thought maybe we’d put together a few temporary, informal memos on network protocols, the rules by which computers exchange information. I offered to organize our early notes.

What was supposed to be a simple chore turned out to be a nerve-racking project. Our intent was only to encourage others to chime in, but I worried we might sound as though we were making official decisions or asserting authority. In my mind, I was inciting the wrath of some prestigious professor at some phantom East Coast establishment. I was actually losing sleep over the whole thing, and when I finally tackled my first memo, which dealt with basic communication between two computers, it was in the wee hours of the morning. I had to work in a bathroom so as not to disturb the friends I was staying with, who were all asleep.

Still fearful of sounding presumptuous, I labeled the note a “Request for Comments.” R.F.C. 1, written 40 years ago today, left many questions unanswered, and soon became obsolete. But the R.F.C.’s themselves took root and flourished. They became the formal method of publishing Internet protocol standards, and today there are more than 5,000, all readily available online.

But we started writing these notes before we had e-mail, even before the network was really working, so we wrote our visions for the future on paper and sent them around via the postal service. We’d mail each research group one printout, and they’d have to photocopy more themselves.

The early R.F.C.’s ranged from grand visions to mundane details, although the latter quickly became the most common. Less important than the content of those first documents was that they were available free of charge and anyone could write one. Instead of authority-based decision-making, we relied on a process we called “rough consensus and running code.” Everyone was welcome to propose ideas, and if enough people liked and used a design, it became a standard.

After all, everyone understood there was a practical value in choosing to do the same task in the same way. For example, if we wanted to move a file from one machine to another, and if you were to design the process one way, and I was to design it another, then anyone who wanted to talk to both of us would have to employ two distinct ways of doing the same thing. So there was plenty of natural pressure to avoid such hassles. It probably helped that in those days we avoided patents and other restrictions; without any financial incentive to control the protocols, it was much easier to reach agreement.

This was the ultimate in openness in technical design and that culture of open processes was essential in enabling the Internet to grow and evolve as spectacularly as it has. In fact, we probably wouldn’t have the Web without it. When CERN physicists wanted to publish a lot of information in a way that people could easily get to it and add to it, they simply built and tested their ideas. Because of the groundwork we’d laid in the R.F.C.’s, they did not have to ask permission, or make any changes to the core operations of the Internet. Others soon copied them — hundreds of thousands of computer users, then hundreds of millions, creating and sharing content and technology. That’s the Web.

Put another way, we always tried to design each new protocol to be both useful in its own right and a building block available to others. We did not think of protocols as finished products, and we deliberately exposed the internal architecture to make it easy for others to gain a foothold. This was the antithesis of the attitude of the old telephone networks, which actively discouraged any additions or uses they had not sanctioned.

Of course, the process for both publishing ideas and for choosing standards eventually became more formal. Our loose, unnamed meetings grew larger and semi-organized into what we called the Network Working Group. In the four decades since, that group evolved and transformed a couple of times and is now the Internet Engineering Task Force. It has some hierarchy and formality but not much, and it remains free and accessible to anyone.

The R.F.C.’s have grown up, too. They really aren’t requests for comments anymore because they are published only after a lot of vetting. But the culture that was built up in the beginning has continued to play a strong role in keeping things more open than they might have been. Ideas are accepted and sorted on their merits, with as many ideas rejected by peers as are accepted.

As we rebuild our economy, I do hope we keep in mind the value of openness, especially in industries that have rarely had it. Whether it’s in health care reform or energy innovation, the largest payoffs will come not from what the stimulus package pays for directly, but from the huge vistas we open up for others to explore.

I was reminded of the power and vitality of the R.F.C.’s when I made my first trip to Bangalore, India, 15 years ago. I was invited to give a talk at the Indian Institute of Science, and as part of the visit I was introduced to a student who had built a fairly complex software system. Impressed, I asked where he had learned to do so much. He simply said, “I downloaded the R.F.C.’s and read them.”

Stephen D. Crocker is the chief executive of a company that develops information-sharing technology.

What Are the Odds?

Predictions are difficult in an unstable economy, but there are still ways to turn your material forecast into more than an educated guess.

Even when business is booming, it can be tough to predict what twists and turns might affect variables key to your bottom line, such as product demand and commodities prices. Accurate prediction seems even less likely in today's economy, as evidenced by the numerous companies that have simply declined to provide sales or revenue guidance to investors in recent months. Amid such uncertainty, attempts to make predictions about next week, much less the next quarter, can feel like exercises in futility. Along with sales figures, most companies have found that their ability to forecast demand, and consequently much of their material needs, has also become severely diminished.

Nonetheless, manufacturers still need to figure out ways to make do. For example, simply refining and having a better understanding of their own business needs can help generate realistic forecasts that could provide just enough certainty to make it through these tough times, says Anne Omrod, CEO of John Galt Solutions, a provider of forecasting solutions.

"There's no doubt that manufacturers are having a difficult time forecasting their material needs in today's economic climate," Omrod says. "But because it's harder, it has become even more important to have good processes in place. Much of the emphasis focuses on finished goods, but we're also seeing a lot of companies who need to translate that finished goods forecast back toward how much material they are buying."

Often, the risks most companies face fall into two key categories. The first relates to demand planning: how well companies understand their customers and how those customers' needs are changing. The second concerns material needs, which are driven by those demand plans and should rest on a good understanding of all of a company's product lifecycles. The challenging part, explains Omrod, is that both categories are currently being thrown for a loop.

"In most cases past product lifecycles are being altered significantly," she explains. "So what a company did a year ago probably won't help them determine what they're going to need a month from now. No one is sure what new customers they might get, or more importantly, which ones they might lose. It creates a lot of variability that wasn't there before."

Of course, dramatic cost-cutting measures have become one of the more popular responses. In buying materials, cuts have moved to a "lower level" of forecasting that targets not only typical inventory controls on finished goods but also goes deeper into the process to find areas where the number of units purchased for a particular product can be reduced.

"Through this process companies often find they can get away with buying five units of something instead of 10," Omrod says. "Then once they have confidence in the forecast and can increase its accuracy, their inventory levels and safety stock can be fine-tuned. This offers more control over inventory and prevents some of the twists and turns that tend to frustrate buyers."

Understanding what level of safety stock is required for each item is a good place to start, notes Omrod. In many cases, software automation plays a large role in the process, better equipping companies to evaluate safety stock levels and planned inventory. It also introduces at least some element of stability to a process subject to market swings that are becoming more and more unpredictable.
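Omrod does not describe a specific formula, but one common textbook way to size safety stock is SS = z × σ_d × √L. It assumes independent, normally distributed demand per period and a fixed lead time L, with z taken from the target service level. Below is a minimal Python sketch under those assumptions. The function names and the example figures are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def safety_stock(service_level: float, demand_std: float, lead_time: float) -> float:
    """Textbook safety stock: z * sigma_d * sqrt(L).

    Assumes per-period demand is normal and independent across periods,
    and that the lead time (in periods) is fixed.
    """
    if not 0.0 < service_level < 1.0:
        raise ValueError("service_level must be strictly between 0 and 1")
    z = NormalDist().inv_cdf(service_level)  # z-score for the target cycle service level
    return z * demand_std * sqrt(lead_time)


def reorder_point(mean_demand: float, demand_std: float,
                  lead_time: float, service_level: float) -> float:
    """Expected demand over the lead time plus the safety stock buffer."""
    return mean_demand * lead_time + safety_stock(service_level, demand_std, lead_time)


if __name__ == "__main__":
    # Hypothetical item: 100 units/week on average, std dev 20, 4-week lead time.
    ss = safety_stock(0.95, 20, 4)
    rop = reorder_point(100, 20, 4, 0.95)
    print(f"safety stock ~ {ss:.1f} units, reorder point ~ {rop:.1f} units")
```

A higher service level or a more volatile demand history enlarges the buffer. This is why a more accurate forecast, with a smaller σ_d, lets buyers trim safety stock.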

China's Competition for Capacity

Current realities of upstream supplier capacity in China influence downstream operations and total cost models.

April 6, 2009

Over the past 15 years, China's manufacturing sector has been fueled by competition. Growing local and foreign demand has multiplied opportunities for profit. More recently, cost and quality have shaped inter-industry rivalry. Where there was once a buyer's market, today many suppliers hold the position of power. These factors, along with increasing industry consolidation, have greatly influenced upstream production capacity.

Capacity is a dynamic component of short-term China supply chain development. Undercapacity can create throughput bottlenecks, which lengthen lead times further downstream. Overcapacity can create pricing wars leading to inferior material substitutes. With today's realities forcing internal cost analysis, companies must concern themselves with securing capacity to actively decrease indirect costs.

Buyer versus Supplier Market

To assess the dynamics of an industry supply chain, buyer and supplier characteristics should be considered. This includes supplier and buyer knowledge and production capacity. In 1990, for example, few suppliers in China knew the market price of products in the US. Suppliers based their pricing on cost alone. At this time, the knowledge and price gaps were large. The China sourcing gold rush began.

Today, the environment has changed almost overnight. With the spread of information through the internet, finished-product pricing is readily available. Few procurement directors or supplier managers outside China, however, follow input-material market pricing in China. The power that comes with knowledge has shifted to suppliers, and so has a larger share of supply chain profit.

Production capacity has undergone a similar shift. As late as 1995, few companies outside labor-intensive industries were supplying large customers directly from China. Today, in 2009, China is at the center of global manufacturing. With growth in local and export demand, production capacity requirements have increased exponentially. As a result, supplier power has continued to rise.

Competitive Dynamics with Undercapacity

In specific industries, such as hand-painted ceramics or airfield lighting systems, a noticeable production capacity gap exists. These industries face a difficult dilemma: insufficient Chinese production capacity combined with a requirement for "China pricing." In a few cases, shrinking profit margins have driven once highly competitive industries to consolidate. In others, manufacturers are exiting because of poor performance. These influences are becoming systemic constraints in upstream operations, leading to headaches in demand fulfillment and lead-time planning. The question, then, is how to reduce the costs and risks associated with upstream production capacity bottlenecks.

The theory of constraints (TOC) drum-buffer-rope model sheds some light. In this model, the bottleneck (the "drum") sets the pace for the whole system, and the "rope" ties the release of material to the bottleneck's schedule. It is therefore essential to protect the bottleneck by maintaining buffer inventory immediately before the constraint. After the constraint, it is important to leave adequate space or lead time. With manufacturing operations in China, inventory and production planning responsibility is often left solely to suppliers. As a result, inventory placement is managed inefficiently, often requiring expedited delivery. The associated indirect costs, such as later-stage production downtime and delays, often go unaddressed in total cost models.
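The drum-buffer-rope logic can be sketched in Python under a few simplifying assumptions. There is a single bottleneck with a fixed daily capacity, and the time buffer is measured in whole days. The order data, capacity, and function names are hypothetical. The drum schedule runs orders back to back at the bottleneck's pace. The rope then releases material one buffer ahead of each order's bottleneck start.

```python
from datetime import date, timedelta
from math import ceil


def drum_schedule(orders, capacity_per_day, first_day):
    """Schedule orders back to back at the bottleneck (the 'drum').

    orders: list of (order_id, quantity) in processing sequence.
    Returns {order_id: (start_day, end_day)}, with end_day exclusive.
    """
    schedule = {}
    day = first_day
    for order_id, qty in orders:
        days_needed = ceil(qty / capacity_per_day)  # whole days at the constraint
        schedule[order_id] = (day, day + timedelta(days=days_needed))
        day += timedelta(days=days_needed)
    return schedule


def rope_releases(schedule, buffer_days):
    """Release material buffer_days before each order reaches the bottleneck (the 'rope')."""
    return {oid: start - timedelta(days=buffer_days)
            for oid, (start, _end) in schedule.items()}


if __name__ == "__main__":
    orders = [("A", 500), ("B", 300)]  # hypothetical orders, in units
    drum = drum_schedule(orders, capacity_per_day=200, first_day=date(2009, 5, 4))
    for oid, release in rope_releases(drum, buffer_days=5).items():
        print(oid, "release", release, "bottleneck start", drum[oid][0])
```

The buffer days are the protection in front of the constraint. If nobody sets them deliberately, late material shows up as idle bottleneck time or expedited shipments.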

One potential solution is the purchasing-capacity model: buying capacity instead of product ensures that production capacity is available when required. Another is for the customer to secure the raw material itself, which can mitigate price fluctuations and quality variability. These examples of coordinated planning help buffer the constraint, here production capacity, so that its utilization rate remains high.

One company that has effectively reduced upstream capacity risks is IKEA. In 2005, IKEA established a global supply chain management division. Traditional roles shifted: technicians became instructors to suppliers, and procurement managers became accountable for logistics and in-transit inventory. From 2000 to 2005, average operational costs fell 46% while sales grew by 345%. Instead of increasing pressure on suppliers over capacity bottlenecks, IKEA sought ways to improve supplier operations, increasing throughput and supply chain profitability.

Competitive Dynamics with Overcapacity

In contrast, overcapacity is common for many industries in China. Overcapacity is primarily created by low barriers to entry, highly fragmented markets and easily transferred technology. Large volume production with low product differentiation also plays an important role. Examples would be input materials such as paint, bolts and MDF distribution.

With overcapacity, the balance between cost and flexibility becomes critical. Here, inventory placement and lead-time scheduling directly affect costs, but in different ways. The supply chain architecture for industries with overcapacity has more variables: multiple material-flow choices offset the risks inherent in inventory positioning and supplier order scheduling. Supply chain models that do not account for such flexibility commonly lack a comprehensive view of total cost reduction strategies. Overcapacity also allows more inventory to be placed upstream as work-in-process inventory.

This reality is evident in numerous industries in China. Take, for example, the automotive industry. Overcapacity has resulted from increased foreign demand and the expansion of local consumer car purchases, which grew 21.84% in 2007. More recently, however, this growth has become a root cause of excess supply chain costs. Because automotive components are sourced from China worldwide, these systemic influences reach a global scale. With the current downturn in global demand, numerous suppliers are saddled with excess inventory, and assembly lines in China sit idle.

As the world continues to face the economic downturn, upstream capacity and cost considerations will remain important. The key to analyzing upstream capacity is current-state mapping of the industry's supply chain design. As many companies are realizing, without a hands-on view of upstream operations in China, it is increasingly difficult to manage cost components such as inventory holding and throughput, and, as a result, service level. As we see time and again, when you buy a product, you buy the supply chain. Early inefficiencies often ripple through the entire supply chain.

Bradley A. Feuling is the CEO of Kong and Allan based in Shanghai, China. Kong and Allan is a consulting firm specializing in supply chain operations and global corporate expansion. Research assistance provided by Xia Jun, an Associate Consultant at Kong and Allan China. http://www.kongandallan.com

Why IBM Needs Sun

Monday, April 06, 2009

Insiders at both companies say that it has much to do with Sun's intellectual property.

By Robert X. Cringley

After weeks of private negotiations, IBM was poised to buy rival Sun Microsystems for a reported $7 billion. Negotiations apparently broke down on Sunday when Sun's board rejected a reduced offer. But beyond allowing IBM to reclaim from Hewlett-Packard the title of world's biggest computer company, why would the company want Sun, a sprawling Unix vendor that has struggled for years to even show a profit? The answer, according to insiders at both companies, lies in Sun's intellectual property.

Not only would Sun be IBM's largest acquisition ever, but the buy is out of character for the staid mainframe company, which has worked for several years to streamline itself and become a very profitable vendor of computer and Internet services. But sometimes a deal comes along that's simply too good to pass up. Despite years of losses, Sun has continued to spend an average of $3 billion per year on research and development. Sun also has a huge patent portfolio that might have unique value to IBM, the world's largest and arguably most aggressive licenser of technical IP, according to experts in IP licensing.

The parts of Sun that have most value to IBM are the Java programming language, Solaris (Sun's version of the Unix operating system), the MySQL open-source database, and certain virtualization and cloud-computing components.

IBM has already made a huge commitment to Java, a language that it doesn't control. Now almost 15 years old, Java has come into its own as a platform for mobile computing and server applications. "As a high-level language, Java is ideal for applications that are intended to run for weeks and months at a time without having to restart," says Paul Tyma, former senior developer of server software at Google and now chief technical officer at Home-Account, an Internet startup in San Francisco. "Compared to older languages like C++, Java is ideal for large enterprise applications," he adds. "The longer it runs, the better it runs."

Java is also the dominant development environment for applications running on more than one billion mobile phones--an area of computing that is not only growing like crazy but, with mobile devices being replaced every 18 months, evolving like crazy. Buying Sun would give IBM a crucial piece of that new business.

IBM already has its own version of the Unix operating system, called AIX, but Sun's Solaris has larger market share and runs on a broader selection of hardware than AIX, which is aimed primarily at very big systems. But there's an additional attraction to Solaris, one that is critical primarily for legal reasons.

For years, IBM has been dogged by a lawsuit from the tiny SCO Group of Lindon, UT. SCO holds certain rights to the UNIX operating system acquired from Novell and before that AT&T, and the company claims that IBM is responsible for allowing certain SCO UNIX code (and possibly AIX code) to be inserted in Linux, an open-source version of Unix that IBM has been involved in developing. While IBM has the upper hand in the SCO suit, which has been ongoing since 2003, it has become clear that some code commingling has taken place, which could hurt future copyright and intellectual-property claims over software developed for Linux and AIX. Sun's Solaris, however, has taken an entirely separate development path and is free of any such taint. In other words, its DNA is clean. Given the years of SCO litigation, this has value for IBM.

Both Sun and IBM are major players in the Unix workstation market. If there are antitrust concerns about this merger they will probably center on the intersection of those hardware businesses.

IBM already owns the DB2 SQL database, while Sun paid $1.1 billion last year to buy MySQL, the most popular open-source SQL database around. Owning MySQL as well would potentially give IBM new advantages at both ends of the market and help the company compete better against Oracle Corporation, its chief database rival.

Cloud computing, in which applications run in data centers on hundreds or thousands of servers, is an important new computing market. It depends on virtualization--software that allows several operating systems to run at the same time on a single server. IBM has recently made several significant announcements about cloud computing and server virtualization. But announcements alone aren't enough, according to sources inside IBM. Sun has virtualization and cloud-computing software that will allow IBM to deliver what it has promised.

No wonder IBM is so interested in Sun.

The Best Computer Interfaces: Past, Present, and Future

Monday, April 06, 2009

Say goodbye to the mouse and hello to augmented reality, voice recognition, and geospatial tracking.

By Duncan Graham-Rowe

Multitouch screen: Microsoft’s Surface is an example of a multitouch screen. Credit: Microsoft

Computer scientists from around the world will gather in Boston this week at Computer-Human Interaction 2009 to discuss the latest developments in computer interfaces. To coincide with the event, we present a roundup of the coolest computer interfaces past, present, and future.

The Command Line

The granddaddy of all computer interfaces is the command line, which surfaced as a more effective way to control computers in the 1950s. Previously, commands had to be fed into a computer in batches, usually via a punch card or paper tape. Teletype machines, which were normally used for telegraph transmissions, were adapted as a way for users to change commands partway through a process, and receive feedback from a computer in near real time.

Video display units allowed command line information to be displayed more rapidly. The VT100, a video terminal released by Digital Equipment Corporation (DEC) in 1978, is still emulated by some modern operating systems as a way to display the command line.

Graphical user interfaces, which emerged commercially in the 1980s, made computers much easier for most people to use, but the command line still offers substantial power and flexibility for expert users.

The Mouse

Nowadays, it's hard to imagine a desktop computer without its iconic sidekick: the mouse.

Developed 41 years ago by Douglas Engelbart at the Stanford Research Institute, in California, the mouse is inextricably linked to the development of the modern computer and also played a crucial role in the rise of the graphical user interface. Engelbart demonstrated the mouse, along with several other key innovations, including hypertext and shared-screen collaboration, at an event in San Francisco in 1968.

Early computer mouses came in a variety of shapes and forms, many of which would be almost unrecognizable today. However, by the time mouses became commercially available in the 1980s, the mold was set. Three decades on and despite a few modifications (including the loss of its tail), the mouse remains relatively unchanged. That's not to say that companies haven't tried adding all manner of enhancements, including a mini joystick and an air ventilator to keep your hand sweat-free and cool.

Logitech alone has now sold more than a billion of these devices, but some believe that the mouse is on its last legs. The rise of other, more intuitive interfaces may finally loosen the mouse's grip on us.

The Touchpad

Despite stiff competition from track balls and button joysticks, the touchpad has emerged as the most popular interface for laptop computers.

With most touchpads, a user's finger is sensed by detecting disruptions to an electric field caused by the finger's natural capacitance. It's a principle that was employed as far back as 1953 by Canadian pioneer of electronic music Hugh Le Caine, to control the timbre of the sounds produced by his early synthesizer, dubbed the Sackbut.
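As a rough illustration of the capacitive principle, a controller can compare each sensor cell's reading with a no-touch baseline. It then reports the weighted centroid of the cells that change by more than a threshold. This Python sketch assumes a small grid of already-digitized readings in arbitrary units. Real controllers add filtering, drift compensation, and multitouch tracking. The function name and numbers are hypothetical.

```python
def touch_centroid(readings, baseline, threshold):
    """Return the (row, col) centroid of a touch, or None if no cell crosses threshold.

    readings and baseline are equal-sized 2-D lists of capacitance values.
    The absolute change is used because, depending on the sensing scheme,
    a finger can raise or lower the measured value.
    """
    weight_sum = row_acc = col_acc = 0.0
    for r, (row, base_row) in enumerate(zip(readings, baseline)):
        for c, (value, base) in enumerate(zip(row, base_row)):
            delta = abs(value - base)
            if delta > threshold:
                weight_sum += delta
                row_acc += r * delta
                col_acc += c * delta
    if weight_sum == 0.0:
        return None
    return (row_acc / weight_sum, col_acc / weight_sum)


if __name__ == "__main__":
    baseline = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
    finger = [[10, 10, 10], [10, 20, 20], [10, 10, 10]]  # finger over two cells
    print(touch_centroid(finger, baseline, threshold=5))  # (1.0, 1.5)
```

Weighting by the size of each change lets the reported position fall between sensor cells. That is how a coarse grid can still track a finger smoothly.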

The touchpad is also important as a precursor to the touch-screen interface. Many touchpads now feature multitouch capabilities, expanding the range of possible uses. The first multitouch touchpad for a computer was demonstrated back in 1984, by Bill Buxton, then a professor of computer design and interaction at the University of Toronto and now also principal researcher at Microsoft.

The Multitouch Screen

Mention touch screen computers, and most people will think of Apple's iPhone or Microsoft's Surface. In truth, the technology is already a quarter of a century old, having debuted in the HP-150 computer in 1983. Long before desktop computers became common, basic touch screens were used in ATMs to allow customers, who were largely computer illiterate, to use computers without much training.

However, it's fair to say that Apple's iPhone has helped revive the potential of the approach with its multitouch screen. Several cell-phone manufacturers now offer multitouch devices, and both Windows 7 and future versions of Apple's MacBook are expected to do the same. Various techniques can enable multitouch screens: capacitive sensing, infrared, surface acoustic waves, and, more recently, pressure sensing.

With this renaissance, we can expect a whole new lexicon of gestures designed to make it easier to manipulate data and call up commands. In fact, one challenge may be finding means to reproduce existing commands in an intuitive way, says August de los Reyes, a user-experience researcher who works on Microsoft's Surface.

Gesture Sensing

Compact magnetometers, accelerometers, and gyroscopes make it possible to track the movement of a device. Using both Nintendo's Wii controller and the iPhone, users can control games and applications by physically maneuvering each device through the air. Similarly, it's possible to pause and play music on Nokia's 6600 cell phone simply by tapping the device twice.

New mobile applications are also starting to tap into this trend. Shut Up, for example, lets Nokia users silence their phone simply by turning it face down. Another app, called nAlertMe, uses a 3-D gestural passcode to deter theft: the handset sounds a shrill alarm if the user doesn't move it in a predefined pattern in midair to switch it on.
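How Shut Up actually works isn't described here, but a face-down check can be sketched from accelerometer data alone. This Python sketch assumes the common mobile convention in which the z axis points out of the screen. Under that convention, a device lying face up reads roughly +9.81 m/s² on z. The function name and the 20-degree tolerance are hypothetical choices.

```python
import math


def is_face_down(ax: float, ay: float, az: float, tolerance_deg: float = 20.0) -> bool:
    """True if the screen points roughly at the ground.

    Assumes z points out of the screen, so a resting face-down device
    reads about -g on z. The check measures the angle between the
    measured acceleration vector and the -z axis.
    """
    magnitude = math.sqrt(ax * ax + ay * ay + az * az)
    if magnitude == 0.0:
        return False  # no usable reading (e.g. free fall)
    cos_angle = -az / magnitude
    return cos_angle >= math.cos(math.radians(tolerance_deg))


if __name__ == "__main__":
    print(is_face_down(0.0, 0.0, -9.81))  # lying screen-down on a table
    print(is_face_down(0.0, 9.81, 0.0))   # held upright
```

A practical app would likely also require the orientation to stay stable for a moment before muting. Otherwise a phone briefly flipped in the hand would be silenced.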

The next step in gesture recognition is to enable computers to better recognize hand and body movements visually. Sony's Eye showed that simple movements can be recognized relatively easily. Tracking more complicated 3-D movements in irregular lighting is more difficult, however. Startups, including Xtr3D, based in Israel, and Soft Kinetic, based in Belgium, are developing computer vision software that uses infrared for whole-body-sensing gaming applications.

Oblong, a startup based in Los Angeles, has developed a "spatial operating system" that recognizes gestural commands, provided the user wears a pair of special gloves.

Force Feedback

A field of research called haptics explores ways that technology can manipulate our sense of touch. Some game controllers already vibrate with an on-screen impact, and similarly, some cell phones shake when switched to silent.

More specialized haptic controllers include the PHANTOM, made by SensAble, based in Woburn, MA. These devices are already used for 3-D design and medical training--for example, allowing a surgeon to practice a complex procedure using a simulation that not only looks, but also feels, realistic.

Haptics could soon add another dimension to touch screens too, by better simulating the feeling of clicking a button when an icon is touched. Vincent Hayward, a leading expert in the field at McGill University in Montreal, Canada, has demonstrated how to generate different sensations for different icons on a "haptic button." In the long term, Hayward believes that it will even be possible to use haptics to simulate the sensation of textures on a screen.

Voice Recognition

Speech recognition has always struggled to shake off a reputation for being sluggish, awkward, and, all too often, inaccurate. The technology has only really taken off in specialist areas where a constrained and narrow subset of language is employed or where users are willing to invest the time needed to train a system to recognize their voice.

This is now changing. As computers become more powerful and parsing algorithms smarter, speech recognition will continue to improve, says Robert Weidmen, VP of marketing for Nuance, the firm that makes Dragon Naturally Speaking.

Last year, Google launched a voice search app for the iPhone, allowing users to search without pressing any buttons. Another iPhone application, called Vlingo, can be used to control the device in other ways: in addition to searching, a user can dictate text messages and e-mails, or update his or her status on Facebook with a few simple commands. In the past, the challenge has been adding enough processing power for a cell phone. Now, however, faster data-transfer speeds mean that it's possible to use remote servers to seamlessly handle the number crunching required.

Augmented Reality

An exciting emerging interface is augmented reality, an approach that fuses virtual information with the real world.

The earliest augmented-reality interfaces required complex and bulky motion-sensing and computer-graphics equipment. More recently, cell phones with powerful processing chips and sensors have begun to bring the technology within reach of ordinary users.

Examples of mobile augmented reality include Nokia's Mobile Augmented Reality Application (MARA) and Wikitude, an application developed for Google's Android phone operating system. Both allow a user to view the real world through a camera screen with virtual annotations and tags overlaid on top. With MARA, this virtual data is harvested from the points of interest stored in the NavTeq satellite navigation application. Wikitude, as the name implies, gleans its data from Wikipedia.

These applications work by monitoring data from an arsenal of sensors: GPS receivers provide precise positioning information, digital compasses determine which way the device is pointing, and magnetometers or accelerometers calculate its orientation. A project called Nokia Image Space takes this a step further by allowing people to store experiences--images, video, sounds--in a particular place so that other people can retrieve them at the same spot.
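The geometry behind this kind of overlay can be sketched in a few lines. First, compute the compass bearing from the phone's GPS fix to a point of interest. Then compare it with the heading reported by the digital compass, and place a label only if the point falls inside the camera's horizontal field of view. This is not MARA's or Wikitude's code. The 60-degree field of view, 480-pixel screen width, and function names are hypothetical assumptions, and the sketch ignores device tilt.

```python
import math


def initial_bearing(lat1, lon1, lat2, lon2):
    """Great-circle initial bearing from point 1 to point 2, in degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def label_x(device_lat, device_lon, heading_deg, poi_lat, poi_lon,
            fov_deg=60.0, screen_width=480):
    """Horizontal pixel for a POI label, or None if the POI is outside the field of view."""
    bearing = initial_bearing(device_lat, device_lon, poi_lat, poi_lon)
    # Signed angle from the camera's center line to the POI, in [-180, 180).
    offset = (bearing - heading_deg + 540.0) % 360.0 - 180.0
    if abs(offset) > fov_deg / 2:
        return None
    return (offset / fov_deg + 0.5) * screen_width
```

For example, a point due east of the phone lands in the middle of the screen when the compass reads 90 degrees. It drops out of view entirely when the phone faces north.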

Spatial Interfaces

In addition to enabling augmented reality, the GPS receivers now found in many phones can track people geographically. This is spawning a range of new games and applications that let you use your location as a form of input.

Google's Latitude, for example, lets users show their position on a map by installing software on a GPS-enabled cell phone. As of October 2008, some 3,000 iPhone apps were already location aware. One such iPhone application is iNap, which is designed to monitor a person's position and wake her up before she misses her train or bus stop. Jelle Prins, of Dutch software development company Moop, came up with the idea when he was worried about missing his stop on the way to the airport. The app can connect to a popular train-scheduling program used in the Netherlands and automatically identify your stops based on your previous travel routines.
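The core of an iNap-style alarm can be sketched as a distance check repeated on every GPS fix. This Python example is an assumption about how such a check might work, not iNap's actual code. It uses the haversine formula to compute the great-circle distance between the current position and the destination stop, and reports the first fix that comes within a hypothetical 1,000-meter wake-up radius. It does not attempt the schedule integration or route learning that the app offers.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance between two (lat, lon) points, in meters."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    # min() guards against rounding pushing the argument just above 1.
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))

def first_wake_index(fixes, stop, radius_m=1000.0):
    """Index of the first GPS fix within radius_m of the stop, or None if there is none.

    fixes: iterable of (lat, lon) tuples in the order they arrive.
    stop: (lat, lon) of the destination stop.
    radius_m: hypothetical wake-up radius in meters.
    """
    for i, (lat, lon) in enumerate(fixes):
        if haversine_m(lat, lon, stop[0], stop[1]) <= radius_m:
            return i
    return None

if __name__ == "__main__":
    stop = (52.0, 5.0)
    # Simulated fixes approaching the stop from the south.
    fixes = [(51.95, 5.0), (51.98, 5.0), (51.995, 5.0), (52.0, 5.0)]
    print(first_wake_index(fixes, stop))
```

A fixed radius is the simplest trigger; a real app might instead scale the radius with the vehicle's speed so that a fast train still leaves enough time to wake up.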

SafetyNet, a location-aware application developed for Google's Android platform, lets users define parts of town that they deem unsafe. If they accidentally wander into one of these no-go areas, the program becomes active; a quick shake of the phone then sounds an alarm and automatically calls 911 on speakerphone.
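A SafetyNet-style check combines two pieces: a geofence test on the GPS position and a shake detector on the accelerometer. The Python sketch below is our own illustration, not the application's code. It uses the standard ray-casting point-in-polygon test, treating latitude and longitude as flat coordinates (a reasonable approximation for neighborhood-sized zones), and a crude shake detector that fires when any acceleration sample exceeds a hypothetical threshold of 2.5 g. It assumes that a shake inside a zone is what triggers the alarm and call; the function names and returned action strings are placeholders.

```python
import math

G = 9.81  # standard gravity, m/s^2

def in_zone(lat, lon, polygon):
    """Ray-casting point-in-polygon test.

    polygon is a list of (lat, lon) vertices. Coordinates are treated as
    planar, which is adequate for small zones away from the poles and the
    180-degree meridian.
    """
    inside = False
    n = len(polygon)
    for i in range(n):
        lat1, lon1 = polygon[i]
        lat2, lon2 = polygon[(i + 1) % n]
        # Consider only edges that straddle the east-west line through the point.
        if (lat1 > lat) != (lat2 > lat):
            # Longitude where this edge crosses that line.
            lon_cross = lon1 + (lat - lat1) * (lon2 - lon1) / (lat2 - lat1)
            # Count crossings of a ray cast eastward from the point.
            if lon < lon_cross:
                inside = not inside
    return inside

def is_shake(samples, threshold=2.5 * G):
    """True if any (ax, ay, az) sample in m/s^2 has magnitude above threshold."""
    return any(math.sqrt(ax * ax + ay * ay + az * az) > threshold
               for ax, ay, az in samples)

def on_update(lat, lon, accel_samples, unsafe_zones):
    """Decide what to do with one position fix and a batch of accelerometer samples."""
    if not any(in_zone(lat, lon, zone) for zone in unsafe_zones):
        return "idle"            # outside every no-go area
    if is_shake(accel_samples):
        return "alarm_and_call"  # placeholder for sounding the alarm and dialing 911
    return "armed"               # inside a zone, waiting for a shake

if __name__ == "__main__":
    zone = [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0), (1.0, 0.0)]
    resting = [(0.0, 0.0, G)]
    shaking = [(0.0, 0.0, G), (20.0, 15.0, G)]
    print(on_update(0.5, 0.5, resting, [zone]))
    print(on_update(0.5, 0.5, shaking, [zone]))
    print(on_update(2.0, 2.0, shaking, [zone]))
```

Requiring a deliberate shake, rather than calling 911 the moment the user crosses a zone boundary, avoids emergency calls every time someone simply walks through an area they flagged.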

Brain-Computer Interfaces

Perhaps the ultimate computer interface, and one that remains some way off, is mind control.

Surgical implants or electroencephalogram (EEG) sensors can be used to monitor the brain activity of people with severe forms of paralysis. With training, this technology can allow "locked in" patients to control a computer cursor to spell out messages or steer a wheelchair.

Some companies hope to bring the same kind of brain-computer interface (BCI) technology to the mainstream. Last month, NeuroSky, based in San Jose, CA, announced the launch of its Bluetooth gaming headset designed to monitor simple EEG activity. The idea is that gamers can gain extra in-game powers depending on how calm they are.

Beyond gaming, BCI technology could perhaps be used to help relieve stress and information overload. A BCI project called the Cognitive Cockpit (CogPit) uses EEG information in an attempt to reduce the information overload experienced by jet pilots.

The project, which was formerly funded by the U.S. government's Defense Advanced Research Projects Agency (DARPA), is designed to discern when the pilot is being overloaded and manage the way that information is fed to him. For example, if he is already verbally communicating with base, it may be more appropriate to warn him of an incoming threat using visual means rather than through an audible alert. "By estimating their cognitive state from one moment to the next, we should be able to optimize the flow of information to them," says Blair Dickson, a researcher on the project with U.K. defense-technology company QinetiQ.